information theory

papers that measure information flow

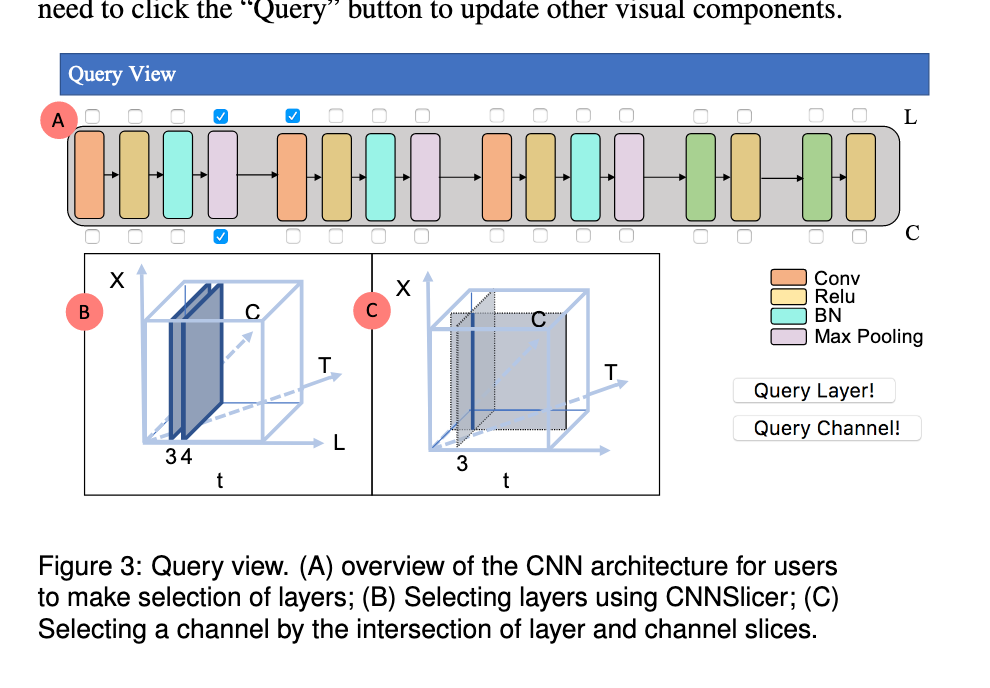

An Information-theoretic Visual Analysis Framework for Convolutional Neural Networks

Uses CNNs, but measures entropy directly instead of trying to estimate mutual information

Information flows of diverse autoencoders

Generalization Bounds for Deep learning

Information Foraging Theory for Programmers

- Programmers “seek out” different types of information “diets”

- https://web.eecs.utk.edu/~azh/blog/informationforaging.html

- These diets can be modeled in some form

- https://alexanderell.is/posts/visualizing-code/

Information foraging for religion

- Most people go to majlis for information (and therefore need to find religious authorities convincing)

Mutual Information

- https://en.wikipedia.org/wiki/Mutual_information

- https://www.youtube.com/watch?v=U9h1xkNELvY

- https://arxiv.org/pdf/1905.06922.pdf <- variational bounds

- On Network Science and Mutual Information for Explaining Deep Neural Networks - https://arxiv.org/pdf/1901.08557.pdf

- Mutual Information Neural Estimation - https://arxiv.org/pdf/1801.04062.pdf
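
As a quick reference for the definition behind these links, here is a minimal sketch that computes I(X;Y) in bits from a small, fully known joint probability table. The function name `mutual_information` and the two toy tables are my own illustrations, not taken from any of the linked papers, which deal with the much harder case of estimating MI from samples.

```python
import math


def mutual_information(joint):
    """Return I(X;Y) in bits for a joint probability table joint[x][y]."""
    # Marginals: sum over rows for p(x), over columns for p(y).
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # terms with p(x, y) = 0 contribute nothing
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi


# Hypothetical toy distributions over two binary variables.
independent = [[0.25, 0.25], [0.25, 0.25]]  # X tells us nothing about Y
copy = [[0.5, 0.0], [0.0, 0.5]]             # Y is an exact copy of X

print(mutual_information(independent))  # 0.0
print(mutual_information(copy))         # 1.0
```

Independent variables share 0 bits, and a perfect copy of a fair bit shares exactly 1 bit.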

Algorithmic Information Theory

- https://en.wikipedia.org/wiki/Algorithmic_information_theory#cite_note-2

- https://www.cs.auckland.ac.nz/research/groups/CDMTCS/docs/ait.php

- https://dl.acm.org/doi/10.1145/321892.321894
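
A rough intuition for the central quantity here, Kolmogorov complexity (the length of the shortest program that outputs a string): it is uncomputable, but the length of a compressed encoding gives a crude upper-bound proxy. The sketch below uses `zlib` as a stand-in compressor; `compressed_length` and the two sample inputs are hypothetical names chosen for illustration, and nothing here comes from the linked references.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data (a crude complexity proxy)."""
    return len(zlib.compress(data, 9))


# A highly regular string: a short program ("repeat 'ab' 5000 times") describes it.
regular = b"ab" * 5000
# Random bytes: with overwhelming probability there is no shorter description.
random_like = os.urandom(10000)

print(len(regular), compressed_length(regular))
print(len(random_like), compressed_length(random_like))
```

The regular string shrinks to a tiny fraction of its size, while the random bytes do not compress at all, which mirrors the idea that random strings are the incompressible ones.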

Information-Theoretic Probing with MDL

https://arxiv.org/abs/2003.12298

Solomonoff Theory of Inductive Inference

- https://www.lesswrong.com/posts/Kyc5dFDzBg4WccrbK/an-intuitive-explanation-of-solomonoff-induction#formalized_science

- https://old.reddit.com/r/artificial/comments/gctich/eli5_what_is_solomonoff_induction/

- https://old.reddit.com/r/MachineLearning/comments/6m38t2/p_attempted_implementation_of_solomonoff_induction/

Information Bottleneck

- related to Scaling laws for solution compressibility

- https://www.youtube.com/watch?v=RKvS958AqGY&t=2249s

- https://www.youtube.com/watch?v=bLqJHjXihK8&t=1482s

Predictive information in RNNs

Information Bottleneck Theory Based Exploration of Cascade Learning

Information bottleneck thesis:

Information Theory Course

Chapter 1

- “The Mathematical Theory of Communication”

- Fundamental limits of communication

- information is uncertainty -> information is modeled as a random variable

- there is uncertainty in the information source, i.e. the information source is noisy

- information is digital and can be modeled as bits

- two fundamental theorems

- source coding theorem: establishes fundamental limits on data compression

- there is always a minimum size to which a file can be losslessly compressed (set by the entropy of the source)

- channel coding theorem: establishes the fundamental limit for reliable communication through a noisy channel

- this limit is called the “channel capacity”

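
To make the two theorems concrete, here is a small sketch under simplifying assumptions: symbols are treated as independent draws (so the empirical byte entropy stands in for the source entropy), and the noisy channel is a binary symmetric channel that flips each bit with a fixed probability. The helper names `entropy_bits`, `empirical_entropy`, and `bsc_capacity` are my own, not from the course.

```python
import math
from collections import Counter


def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p) in bits, skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def empirical_entropy(data: bytes) -> float:
    """Bits per symbol of the empirical byte distribution of data."""
    counts = Counter(data)
    n = len(data)
    return entropy_bits(c / n for c in counts.values())


def bsc_capacity(flip_prob: float) -> float:
    """Capacity in bits per use of a binary symmetric channel: C = 1 - H(flip_prob)."""
    return 1.0 - entropy_bits([flip_prob, 1.0 - flip_prob])


# Source coding: under the i.i.d. assumption, no lossless code can average
# fewer than this many bits per symbol.
print(empirical_entropy(b"aabb"))      # 1.0 bit per symbol

# Channel coding: reliable communication is possible only at rates below capacity.
print(bsc_capacity(0.0))               # 1.0 (noiseless channel)
print(bsc_capacity(0.5))               # 0.0 (output is independent of input)
```

A channel that flips bits half the time carries no information at all, while a noiseless binary channel carries one full bit per use.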